What Is Text-to-SQL?

Text-to-SQL enables users to query databases using natural language, making data access seamless for business users, analysts, and developers. By converting plain English into Structured Query Language (SQL), this technology removes the barriers of coding and empowers non-technical users to extract actionable insights from complex datasets.

This capability breaks down traditional barriers to data access for non-technical employees to retrieve and analyze data without having to rely on help from others. For example, a marketing analyst might ask, “What are the top five products that sold last quarter in North America?” and text-to-SQL will translate that into an SQL query, execute it against the company’s database, and return the results-all within seconds.

A Technology Decades in the Making

Text-to-SQL isn’t new. It originated in the 1990s, evolving from rule-based systems to AI-powered solutions capable of handling real-world complexity. Now, with generative AI (GenAI), text-to-SQL has become indispensable for modern businesses aiming to streamline data queries.

GenAI: A Game Changer for Text-to-SQL

Generative AI has supercharged text-to-SQL by enabling it to handle the nuances of human language and the complexities of modern data systems. Large language models (LLMs) like GPT have revolutionized the way natural language processing (NLP) is applied to database queries.

This leap in capability is driving adoption across industries, particularly for organizations looking to enhance their retrieval-augmented generation (RAG) workflows. By grounding LLMs in real-time, context-rich corporate data, businesses can create AI applications that deliver accurate, actionable insights.

The Role of Text-to-SQL in RAG

Traditionally, many organizations have relied on vector databases to store embeddings of their data, enabling LLMs to retrieve relevant information based on semantic similarity. This approach works well for static or slowly changing data, such as product documentation or knowledge bases. However, for operational data that is constantly changing-like inventory levels, real-time sales figures, or live customer interactions-vectorizing data continuously becomes impractical. The embeddings can quickly become outdated, leading to inaccurate or irrelevant responses.

Text-to-SQL addresses this challenge by generating SQL queries on-the-fly, enabling LLMs to access the most current data directly from live databases without requiring precomputed embeddings. This enables responses based on the latest information, making it ideal for applications that require real-time data access.

Pros and Cons of Embedding All Data in the Vector Databases

Pros:

- Works well for structured and unstructured data

Cons:

- Limited security features

- Requires copying all datasets into the vector database

- Complex to synchronize data changes

For example, a customer support chatbot powered by RAG can answer complex questions like, “What’s the delivery status of my last three orders?” The bot uses text-to-SQL to fetch order data from the backend, so the response is accurate and contextualized.

This ability to ground AI in trusted enterprise data is critical for minimizing issues like AI hallucinations and regulatory compliance, making text-to-SQL a cornerstone of next-generation AI solutions.

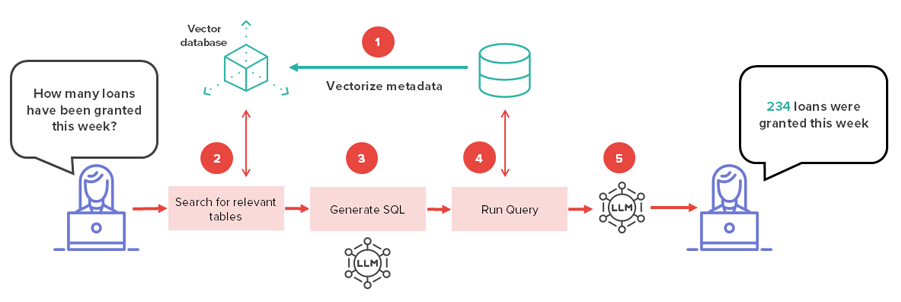

Text-to-SQL

Text-to-SQL enhances retrieval-augmented generation (RAG) workflows by dynamically generating SQL queries. Unlike traditional approaches that rely on embedding entire datasets in vector databases, text-to-SQL focuses on vectorizing metadata. This enables real-time access to live data while maintaining high accuracy and reducing the need for data duplication.

Steps Involved:

- The metadata related to available tables, fields, etc. is embedded in the vector database.

- Based on the prompt (question asked) the relevant table(s) necessary to respond to the prompt is/are identified.

- An LLM is used to generate SQL that can retrieve accurate, real-time data.

- The SQL query retrieves data from corporate systems, keeping the AI model “grounded” in up-to-date, reliable information. The database system's security returns only data the user has access to.

- The LLM synthesizes a response, combining the retrieved data with its natural language generation capabilities.

Pros and Cons of Text-To-SQL

Pros:

- Access to fresh data

- Advanced security managed at the database level

Cons:

- Requires all data to be in one place

- Limited to structured data

Challenges of Text-to-SQL in Real-World RAG Applications

While text-to-SQL offers significant promise, moving from a proof-of-concept (POC) to enterprise-wide deployment introduces several challenges. These arise from the complexities of distributed data landscapes and the need for consistent, real-time access to diverse systems:

- Distributed Data: Data resides across multiple platforms-on-premises databases, cloud data lakes, and SaaS applications-each with unique access methods and storage formats.

- Source Selection: Identifying the best system to answer a query becomes complex when data overlaps or is spread across multiple sources.

- SQL Dialects: Different systems use a variety of query languages, requiring translation and adaptation for each data source.

- Metadata Variability: Inconsistent metadata structures between systems make standardizing what gets vectorized difficult, impacting query accuracy.

- Data Freshness: Basing responses on the latest data is challenging when sources update at different intervals.

- Authorization and Access: Queries must respect user permissions, so only authorized data is retrieved across all systems.

- Security Consistency: Enforcing uniform security policies across distributed data sources is essential to avoid vulnerabilities.

Addressing these challenges is critical to moving beyond POCs and achieving scalable, enterprise-ready text-to-SQL implementations. Success requires balancing flexibility, accuracy, and security while navigating the complexities of today’s distributed data environments.

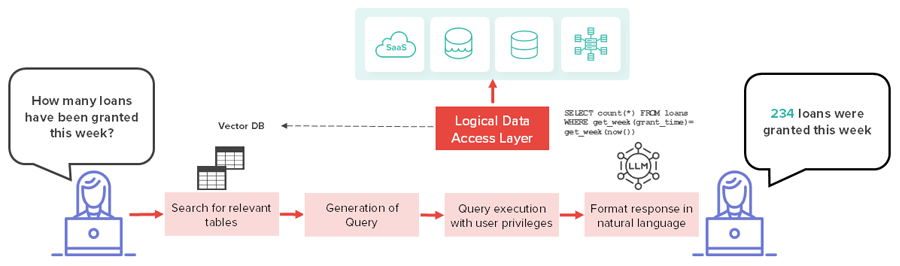

Query RAG: Simplifying Text-to-SQL with a Logical Data Abstraction Layer

(Add another callout: "The success of implementing agentic analytics will depend on having both a well-defined semantic layer and metadata, as well as discoverable and reusable data products and API services." - Gartner - Augment D&A Workflows With Agentic Analytics, 15 October 2024- ID G00820055.) Query retrieval-augmented generation (Query RAG) is an emerging approach that addresses the challenges of implementing text-to-SQL in distributed data landscapes. By leveraging a logical data abstraction layer, Query RAG simplifies data access and establishes consistency, enabling AI-driven insights.

A logical data abstraction layer sits atop various physical data sources, creating a unified view of distributed data.

This abstraction layer:

- Centralizes Access: LLMs interact with a single virtual layer that abstracts underlying data sources.

- Standardizes Data Representation: Establishes a common format, eliminating issues with varying SQL dialects and metadata inconsistencies.

- Embeds Business Context: Maintains enriched semantics, including definitions and relationships.

- Ensures Consistent Security: Applies uniform policies and controls.

By aligning data with business semantics and abstracting technical complexities, Query RAG empowers organizations to deliver reliable, real-time insights through LLMs. This approach not only improves the accuracy of AI responses but also fosters smarter decision-making and better user experiences. Query RAG, powered by a logical data abstraction layer, represents a transformative shift in how enterprises ground AI in distributed, ever-changing data landscapes.

Getting Started with Query RAG

To explore how Query RAG simplifies text-to-SQL in distributed data landscapes, Denodo Express provides an ideal starting point. This free version of the Denodo Platform creates a unified data access layer, seamlessly integrating with distributed data sources and abstracting complexity.

Bundled with an AI SDK, Denodo Express enables developers to quickly build GenAI-powered agents or chatbots using streamlined REST APIs. These tools ground AI models in real-time, trusted enterprise data protected by robust security and governance.

With Denodo Express, you can easily experiment with Query RAG and experience firsthand how it transforms distributed data access and AI-driven insights.